A neural network is a machine learning model used to find trends and make predictions based on data.

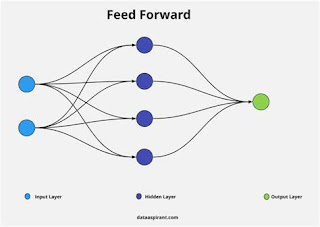

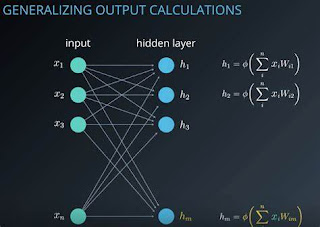

One of the most useful properties of neural networks is that they can make predictions beyond the data they were trained on. This relies on feedback loops and other methods that let the network learn and adapt over time. At a high level, a neural network takes data as input and sends it through a series of layers, each of which applies a different mathematical transformation to the data. The output of one layer becomes the input of the next, and so on, until the final output of the network is reached.
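
The layer-by-layer flow described above can be sketched in a few lines of NumPy. This is only an illustration: the layer sizes, the random weights, and the choice of ReLU as the hidden transformation are assumptions, not details from any particular model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer sizes: 3 inputs -> 4 hidden units -> 1 output
W1, b1 = rng.normal(size=(3, 4)), np.zeros(4)
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)

def forward(x):
    """Pass a batch of inputs through two layers in sequence."""
    hidden = np.maximum(0.0, x @ W1 + b1)  # layer 1: affine map followed by ReLU
    return hidden @ W2 + b2                # layer 2: affine map on layer 1's output

x = rng.normal(size=(5, 3))  # batch of 5 made-up input rows
y = forward(x)
print(y.shape)  # (5, 1)
```

Each layer only sees the output of the one before it, which is what makes the network a chain of transformations.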

During training, the network is given a set of input data and the corresponding output values. It then adjusts the weights and biases of each layer to reduce the difference between its predicted output and the actual output. This process is repeated many times, and the network fits the training data better with each pass. Feedback loops help with this: feeding the network’s output back into itself lets it refine its predictions based on how the output changed in previous rounds.
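
To make the weight-and-bias adjustment concrete, here is a minimal sketch of gradient descent on the smallest possible "network": a single weight and a single bias. The data, the learning rate, the number of steps, and the use of mean squared error are all assumptions chosen for illustration.

```python
import numpy as np

# Made-up training data following y = 2x + 1
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0        # parameters to learn
learning_rate = 0.05   # assumed step size

for step in range(2000):
    error = (w * x + b) - y            # predicted output minus actual output
    grad_w = 2.0 * np.mean(error * x)  # gradient of mean squared error w.r.t. w
    grad_b = 2.0 * np.mean(error)      # gradient of mean squared error w.r.t. b
    w -= learning_rate * grad_w        # move each parameter against its gradient
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))
```

After enough repetitions the parameters settle close to the values that generated the data. A real network repeats the same idea across many weights and layers.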

Extrapolation in Feedforward Neural Networks

Extrapolation in feedforward neural networks is the ability of a network to make predictions for input values outside the range of the training data. Feedforward neural networks are usually trained on a set of input-output pairs. The inputs are fed into the network, and the outputs are used to adjust the weights and biases of the network. During training, the network learns how to map the input data to the output data, and the goal is to make the difference between the predicted output and the real output as small as possible.

To do this, the network needs to learn how to generalize to new information. Extrapolation is an important capability of neural networks because it lets the network make predictions for inputs outside the training data range. This is especially important for real-world uses where the input data can vary widely, and the network needs to make accurate predictions even for input values that weren’t in the training data.
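
What a feedforward network does outside its training range depends on its architecture. As a hedged illustration, the sketch below uses a hypothetical, untrained one-hidden-layer ReLU network with a single input (random weights, 8 hidden units, all assumptions). Such a network is piecewise linear, so once the input moves past the point where the last hidden unit switches on or off, its output follows a straight line.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical untrained one-hidden-layer ReLU network with a scalar input
w1, b1 = rng.normal(size=8), rng.normal(size=8)
w2, b2 = rng.normal(size=8), 0.5

def relu_net(x):
    hidden = np.maximum(0.0, np.outer(x, w1) + b1)  # shape (len(x), 8)
    return hidden @ w2 + b2

# Each hidden unit switches on or off at x = -b1 / w1; past the outermost
# switch point, no unit changes state any more.
last_kink = np.max(np.abs(b1 / w1))

x_far = last_kink + np.array([1.0, 2.0, 3.0, 4.0])
y_far = relu_net(x_far)

# Equal steps in x give equal steps in y, i.e. the output is a straight line
steps = np.diff(y_far)
print(np.allclose(steps, steps[0]))
```

For this kind of network, predictions far from the data are therefore linear continuations, which is accurate only when the true relationship is also close to linear in that region.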

Extrapolation in Recurrent Neural Networks

Extrapolation in Recurrent Neural Networks (RNNs) is the ability of these models to make predictions outside the range of their training data. RNNs are a type of neural network that can handle sequential data because they have an internal state, or “memory,” that retains the context of past inputs. When an RNN is trained on a data series, it learns to update its internal state based on the current input and the previous state.
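
The state update can be sketched as a single function that combines the current input with the previous state. The sizes, the random weights, and the tanh nonlinearity below are assumptions for illustration. This is a plain recurrent cell, not an LSTM or GRU.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 2 input features, 3 hidden-state units
W_x = rng.normal(scale=0.5, size=(2, 3))  # input -> state
W_h = rng.normal(scale=0.5, size=(3, 3))  # previous state -> state
b = np.zeros(3)

def rnn_step(x_t, h_prev):
    """New state depends on the current input and the previous state."""
    return np.tanh(x_t @ W_x + h_prev @ W_h + b)

sequence = rng.normal(size=(4, 2))  # made-up series of 4 time steps
h = np.zeros(3)                     # initial "memory"
for x_t in sequence:
    h = rnn_step(x_t, h)            # state carries context forward

print(h.shape)  # (3,)
```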

Extrapolation in RNNs can be hard because the model must learn to predict values that lie outside the range of its training data. To do this, the model must generalize well and find the underlying patterns in the data instead of just memorizing the training cases. One way to improve an RNN’s extrapolation ability is to use regularization methods such as dropout, which help stop the network from overfitting the training data. Another option is to use more complex architectures, like long short-term memory (LSTM) or gated recurrent unit (GRU) networks, which are better at capturing long-term dependencies in the data.
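
As a rough sketch of what dropout does, the function below implements the common "inverted dropout" variant: during training it zeroes a random fraction of activations and rescales the rest, and at inference it leaves them untouched. The function name, the rate, and the example activations are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def dropout(activations, rate, training=True):
    """Inverted dropout: zero a random fraction of units, rescale the rest."""
    if not training or rate == 0.0:
        return activations
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob  # True where a unit is kept
    return activations * mask / keep_prob

h = np.ones((2, 6))                            # made-up hidden activations
h_train = dropout(h, rate=0.5)                 # entries are either 0.0 or 2.0
h_eval = dropout(h, rate=0.5, training=False)  # unchanged at inference
```

Because a different random subset of units is silenced on every pass, the network cannot rely on any single unit to memorize a training case.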

Extrapolation in Graph Neural Networks

Extrapolation in Graph Neural Networks (GNNs) means the model can make accurate predictions for inputs outside the training data range. In other words, GNNs that are good at extrapolation can generalize well and make good predictions about data points they have yet to see. For example, graph convolutional networks (GCNs) use a fixed, predefined neighborhood around each node to collect information from nearby nodes. This can cause the model to overfit the training data and do poorly on data it has yet to see. Some researchers have suggested using attention mechanisms to weight the inputs of neighboring nodes according to their importance to the target node.
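
Fixed-neighborhood aggregation can be sketched as follows. This is a simplified, row-normalized variant: each node averages the features of itself and its direct neighbors, with the learned weight matrix and nonlinearity of a real GCN layer left out. The graph and the feature values are made up.

```python
import numpy as np

# Made-up undirected graph with 4 nodes: edges 0-1, 0-2, 2-3
A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.array([[1.0], [2.0], [3.0], [4.0]])  # one feature per node

A_hat = A + np.eye(4)                    # add self-loops
deg = A_hat.sum(axis=1, keepdims=True)   # size of each node's neighborhood
H = (A_hat @ X) / deg                    # mean over each fixed neighborhood

print(H.ravel())
```

Every neighbor contributes equally here. An attention mechanism would replace the uniform `1 / deg` weights with learned, per-neighbor weights.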

Augmenting or modifying the graph during training is another way to improve extrapolation, because it helps the model learn to work with data it has never seen before and makes it easier to apply the model to new graphs. Overall, improving extrapolation in GNNs is an important area of study that can help make these models more useful in many real-world applications, such as drug discovery, social network analysis, and recommendation systems.
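
The text does not name a specific way of changing the graph, so the sketch below picks one simple option as an assumption: randomly removing a fraction of edges each time the graph is used for training. The function name and the drop probability are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def drop_edges(A, drop_prob):
    """Return a copy of symmetric adjacency A with random edges removed."""
    upper = np.triu(A, k=1)                      # count each undirected edge once
    keep = rng.random(upper.shape) >= drop_prob  # per-edge keep decision
    kept = upper * keep
    return kept + kept.T                         # restore symmetry

A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 1],
              [0, 0, 1, 0]], dtype=float)
A_aug = drop_edges(A, drop_prob=0.3)
```

Training on many slightly different versions of the same graph exposes the model to neighborhood structures it would not otherwise see.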

The Importance of Extrapolation in Machine Learning

Extrapolation is a key part of machine learning because it lets us make predictions about data we haven’t seen before. This is especially important when it’s not possible or practical to collect a lot of labeled data, as in medical diagnosis or environmental monitoring.

Extrapolation is also necessary for intelligent agents to work in complex, changing environments where new situations constantly arise. For example, self-driving cars must be able to use what they’ve learned from driving in the past to make safe and effective decisions in new driving situations.

How to extrapolate a graph?

Extrapolation is the process of extending a graph beyond its data points. It is used to estimate how a function will behave outside the range of the data that has been collected. Extrapolation can help predict future trends or values, but it can also be misleading if the assumptions it is based on are wrong.

Here’s how to extrapolate from a graph:

Find the functional relationship: To extrapolate a graph, you need to know how the variables relate to each other, for example whether the relationship is linear, quadratic, exponential, or something else.

Find the domain and range: Determine the function’s domain and range. The domain is the set of all possible values for the independent variable, and the range is the set of all possible values for the dependent variable.

Choose an extrapolation method: Different extrapolation methods exist, such as linear, quadratic, and exponential. The choice of method depends on the functional relationship and the data that is available.

Calculate the parameters: Use the known data points to determine the function’s parameters, such as the slope, the intercept, and any other coefficients.

Extrapolate the function: Once you know the functional relationship and the parameters, you can use them to extrapolate the function beyond the range of the data. This means evaluating the function’s equation at values outside the observed range.

Look at the results:

- Review the extrapolated values.

- Check whether your assumptions hold and whether the results make sense.

- Be aware of the limits of extrapolation, and don’t make predictions too far beyond the data you’ve observed.
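
The steps above can be sketched for an exponential relationship. Everything here is illustrative: the data points are made up, and the form `y = a * exp(k * x)` is an assumed relationship that becomes a straight line after taking logarithms.

```python
import numpy as np

# Made-up observations on the domain [0, 4]
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 3.0 * np.exp(0.5 * x)

# Assumed functional relationship: y = a * exp(k * x),
# which is linear in log space: log(y) = k * x + log(a)
k, log_a = np.polyfit(x, np.log(y), 1)  # parameters: slope and intercept
a = np.exp(log_a)

# Extrapolate: evaluate the fitted equation beyond the observed domain
x_new = np.array([5.0, 6.0])
y_new = a * np.exp(k * x_new)

# Look at the results: check the fit against the known points first
max_error = np.max(np.abs(a * np.exp(k * x) - y))
print(k, a, y_new, max_error)
```

If the fit error on the known points is already large, the assumed relationship is probably wrong and the extrapolated values should not be trusted.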

Extrapolation in Python:

Extrapolation is estimating values outside a given range or set of known data points. There are different ways to do extrapolation in Python.

One way is to use the numpy library’s polyfit function to fit a polynomial to the known data points, then use the resulting polynomial to estimate values for points outside the known range. Here’s an example snippet:

    import numpy as np

    # Define some sample data
    x = np.array([1, 2, 3, 4, 5])
    y = np.array([5, 10, 15, 20, 25])

    # Fit a quadratic equation to the data
    p = np.polyfit(x, y, 2)

    # Use the equation to extrapolate values for x > 5
    x_extrapolated = np.array([6, 7, 8, 9, 10])
    y_extrapolated = np.polyval(p, x_extrapolated)
    print(y_extrapolated)

This will give you an array of extrapolated y values for x values outside the original data’s range.

Note that extrapolation can be unreliable if the relationship between the variables changes substantially outside the range of the known data. It’s always important to be careful and check extrapolated results against real data.
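
One simple way to run such a check is to hold back the last few known points, fit on the rest, and compare. The sketch below does this with made-up data that is linear at first and then curves upward. The split point and the linear fit are assumptions for illustration.

```python
import numpy as np

# Made-up data: exactly linear up to x = 6, then curving upward
x = np.arange(1.0, 11.0)
y = 5.0 * x + 0.5 * np.maximum(0.0, x - 6.0) ** 2

# Fit on the first six points only, holding back the last four
x_fit, y_fit = x[:6], y[:6]
x_held, y_held = x[6:], y[6:]
p = np.polyfit(x_fit, y_fit, 1)

# Compare extrapolated values with the held-back real values
y_pred = np.polyval(p, x_held)
errors = np.abs(y_pred - y_held)
print(errors)
```

The error grows the further the prediction moves from the fitted range, which is the pattern to watch for when the underlying relationship changes.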

Conclusion

Neural networks are a useful tool for extrapolation in many fields, such as natural language processing, computer vision, and scientific modeling. By learning from large datasets, neural networks can find patterns and relationships that allow them to make predictions and generate new data beyond the original training set. But the accuracy of the extrapolation relies heavily on the quality and representativeness of the training data, as well as on the architecture and parameters of the network. So it’s important to build and test neural network models carefully and to keep improving them as more data comes in. Because they can extrapolate quickly and, when built well, accurately, neural networks have the potential to change many areas of study and business, and their performance continues to improve.